Building Blocks of Computing: An In-Depth Look at Computer Architecture

From Basic Terms to Complex Models: Everything You Need to Know

Computer architecture is the conceptual design and fundamental operational structure of a computer system. It serves as the bridge between the software and hardware, defining how the system executes instructions, handles data, and communicates internally and externally.

Basic terms in computer architecture

The following terms come up often in computer architecture, so it helps to understand them first.

1. Throughput

Throughput refers to the amount of work or the number of instructions a computer system can process in a given period. It is often measured in instructions per second (IPS) or more specifically in millions of instructions per second (MIPS) or billions of instructions per second (GIPS). High throughput indicates that the system can handle a large volume of tasks efficiently. Throughput is influenced by various factors such as the clock speed of the CPU, the efficiency of the instruction pipeline, and the ability to manage multiple tasks simultaneously (parallel processing).
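As a rough illustration, MIPS can be computed from a measured instruction count and elapsed time, or estimated from the clock rate and the average cycles per instruction (CPI). The function names and sample numbers below are made up for this sketch and do not describe any particular processor.

```python
def mips_from_count(instruction_count: int, seconds: float) -> float:
    """Millions of instructions per second from a measured run."""
    return instruction_count / seconds / 1_000_000


def mips_from_clock(clock_hz: float, cycles_per_instruction: float) -> float:
    """Estimate MIPS from clock rate and average cycles per instruction."""
    return clock_hz / cycles_per_instruction / 1_000_000


# Hypothetical run: 6 billion instructions completed in 2 seconds.
print(mips_from_count(6_000_000_000, 2.0))   # 3000.0
# Hypothetical 3 GHz core averaging 1.5 cycles per instruction.
print(mips_from_clock(3_000_000_000, 1.5))   # 2000.0
```

The second form shows why both clock speed and pipeline efficiency matter: halving the CPI raises throughput as much as doubling the clock.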

2. Instructions

Instructions are the basic operations that a CPU can perform, defined by the instruction set architecture (ISA). Each instruction typically includes an operation code (opcode) that specifies the operation to be performed (e.g., add, subtract, load, store), and operands, which are the data on which the operation is performed. Instructions are stored in memory and fetched, decoded, and executed by the CPU in a cycle known as the instruction cycle.

3. Decoding

Decoding is the process by which the CPU interprets or "decodes" the instruction fetched from memory to determine what operation needs to be performed. The instruction is analyzed by the control unit, which interprets the opcode and directs the CPU to execute the appropriate action. Decoding is a critical step in the instruction cycle, as it converts the binary instruction into signals that guide the other components of the CPU, such as the ALU, to perform the desired operation.
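To make the opcode and operand split concrete, here is a minimal sketch of encoding and decoding. It assumes a made-up 16-bit instruction format (4-bit opcode, two 6-bit operand fields) and a made-up opcode table; real ISAs define their own layouts.

```python
# Hypothetical opcode table for an invented 16-bit format:
# bits 15..12 = opcode, bits 11..6 = operand A, bits 5..0 = operand B.
OPCODES = {0x1: "LOAD", 0x2: "STORE", 0x3: "ADD", 0x4: "SUB"}


def encode(opcode: int, a: int, b: int) -> int:
    """Pack an opcode and two operand fields into one 16-bit word."""
    return ((opcode & 0xF) << 12) | ((a & 0x3F) << 6) | (b & 0x3F)


def decode(word: int) -> tuple[str, int, int]:
    """Split a 16-bit word back into (mnemonic, operand A, operand B)."""
    opcode = (word >> 12) & 0xF
    a = (word >> 6) & 0x3F
    b = word & 0x3F
    if opcode not in OPCODES:
        raise ValueError(f"unknown opcode: {opcode:#x}")
    return OPCODES[opcode], a, b


word = encode(0x3, 1, 2)
print(hex(word))     # 0x3042
print(decode(word))  # ('ADD', 1, 2)
```

In hardware the same field extraction is done by wiring, and the control unit turns the opcode into control signals rather than a mnemonic string.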

4. Store

In computer architecture, the term "store" refers to the operation where data is written to a memory location. This data can be the result of a computation, an intermediate value, or information received from an input device. The store operation is an essential part of the CPU's interaction with memory, ensuring that processed data is saved for future use or output. For example, after a calculation, the result might be stored in a specific address in memory so it can be accessed later by other instructions.

5. Fetch

Fetching is the first step in the instruction cycle, where the CPU retrieves an instruction from memory. The address of the instruction to be fetched is usually held in a special register called the program counter (PC). The fetch operation involves transferring the instruction from the memory location to the CPU's instruction register. After fetching, the instruction is then ready to be decoded and executed. Fetching is a repetitive process that occurs continuously as the CPU runs through a program.

6. Instruction Address

An instruction address is the specific memory location where an instruction is stored. The instruction address is typically stored in the program counter (PC) during the fetch phase of the instruction cycle. The CPU uses this address to locate the next instruction to execute. After fetching an instruction, the program counter is usually incremented to point to the address of the next instruction in the sequence, unless the instruction being executed is a jump or branch that alters the flow of the program.
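The way the program counter moves through instruction addresses can be sketched as follows. The tuple-based instruction format and the `JMP`, `NOP`, and `HALT` names are assumptions made for this example only.

```python
def next_pc(pc: int, instruction: tuple) -> int:
    """Return the address of the next instruction to fetch."""
    if instruction[0] == "JMP":
        return instruction[1]   # a jump replaces the PC with its target
    return pc + 1               # otherwise fall through to the next address


def trace(program: list) -> list:
    """Record every instruction address visited until HALT."""
    pc, visited = 0, []
    while True:
        instruction = program[pc]
        visited.append(pc)
        if instruction[0] == "HALT":
            return visited
        pc = next_pc(pc, instruction)


program = [("NOP",), ("JMP", 3), ("NOP",), ("HALT",)]
print(trace(program))  # [0, 1, 3]
```

Address 2 is never visited because the jump at address 1 changes the flow of the program.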

7. Memory (RAM)

Random Access Memory (RAM) is the main memory of a computer where data and instructions are stored temporarily while a computer is running. RAM is volatile, meaning that all data is lost when the power is turned off.

8. Bus

A bus is a communication pathway that connects different components of a computer, such as the CPU, memory, and input/output devices. The bus transfers data, instructions, and control signals between these components.

9. ALU (Arithmetic Logic Unit)

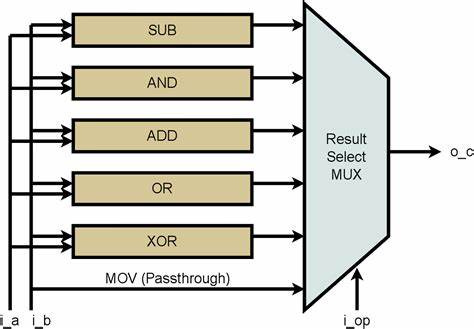

The ALU is a component of the CPU that performs arithmetic operations (like addition and subtraction) and logical operations (like AND, OR, and NOT). It is essential for executing instructions that involve calculations or decision-making.
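A software model of an ALU might look like the sketch below. The operation names, the 8-bit default width, and the single zero flag are simplifying assumptions; real ALUs usually expose more operations and more status flags.

```python
def alu(op: str, a: int, b: int = 0, width: int = 8) -> tuple[int, bool]:
    """Apply one operation and return (result, zero_flag).

    Results wrap around to `width` bits, as they would in fixed-size hardware.
    """
    mask = (1 << width) - 1
    if op == "ADD":
        raw = a + b
    elif op == "SUB":
        raw = a - b
    elif op == "AND":
        raw = a & b
    elif op == "OR":
        raw = a | b
    elif op == "NOT":
        raw = ~a
    else:
        raise ValueError(f"unsupported operation: {op}")
    result = raw & mask
    return result, result == 0


print(alu("ADD", 200, 100))      # (44, False): 300 wraps around in 8 bits
print(alu("SUB", 5, 5))          # (0, True): the zero flag is set
print(alu("NOT", 0b00001111))    # (240, False)
```

The zero flag is what makes decision-making possible: a conditional branch can test it after a subtraction to check whether two values were equal.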

10. Program Counter (PC)

The Program Counter is a special register in the CPU that holds the address of the next instruction to be executed. It automatically increments after each instruction is fetched, ensuring that instructions are executed in sequence.

11. Opcode

An opcode (operation code) is part of an instruction that specifies the operation to be performed by the CPU. For example, an opcode might instruct the CPU to add two numbers or to move data from one location to another.

12. Operand

An operand is the part of a computer instruction that specifies the data on which the operation is to be performed. For example, in the instruction "ADD A, B," A and B are operands.

13. Instruction Set

An instruction set is the complete collection of instructions that a CPU can execute. It defines the operations that the CPU can perform, such as arithmetic operations, data movement, and control flow.

14. Fetch-Decode-Execute Cycle

The fetch-decode-execute cycle is the basic operational process of a CPU. It involves fetching an instruction from memory, decoding it to determine what action is required, and then executing the instruction.

15. Stack

A stack is a data structure used in computer memory where data is stored and retrieved in a last-in, first-out (LIFO) manner. Stacks are often used for managing function calls and storing temporary data.
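The LIFO behaviour can be shown with a small sketch. The `CallStack` class and the return addresses are hypothetical; they only mimic how return addresses are pushed on function calls and popped on returns.

```python
class CallStack:
    """A minimal last-in, first-out stack built on a Python list."""

    def __init__(self) -> None:
        self._items: list[int] = []

    def push(self, return_address: int) -> None:
        self._items.append(return_address)

    def pop(self) -> int:
        if not self._items:
            raise IndexError("pop from empty stack")
        return self._items.pop()

    def __len__(self) -> int:
        return len(self._items)


stack = CallStack()
for address in (100, 200, 300):   # three nested calls
    stack.push(address)

popped = [stack.pop() for _ in range(3)]
print(popped)  # [300, 200, 100]
```

The most recent call returns first, which is exactly the order nested function calls need.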

16. Control Unit

The Control Unit is the part of the CPU that orchestrates the actions of the computer’s hardware components to execute instructions from a program. It doesn't perform any actual data processing tasks (those are handled by the Arithmetic Logic Unit, or ALU) but rather coordinates the various parts of the computer system to ensure that instructions are carried out correctly and efficiently.

Functions of the Control Unit:

Instruction Fetching: The Control Unit retrieves (fetches) the next instruction to be executed from the computer’s memory. The address of the instruction to be fetched is stored in the Program Counter (PC).

Instruction Decoding: Once an instruction is fetched, the Control Unit decodes it to determine what operation is to be performed. This involves interpreting the opcode (operation code) of the instruction, which tells the CPU what type of operation (e.g., addition, subtraction, load, store) to perform.

Controlling Data Flow: The Control Unit manages the data flow between the CPU, memory, and input/output devices. It ensures that the right data is moved to the correct place at the correct time, whether it's transferring data to the ALU for processing or storing the results back in memory.

Generating Control Signals: The Control Unit (CU) generates specific control signals that activate the various components of the CPU and the other parts of the computer. These signals ensure that the operations specified by the decoded instruction are carried out in the correct sequence.

Managing Execution of Instructions: The Control Unit directs the execution of instructions by guiding the ALU, registers, and other components in performing their designated tasks. It ensures that each step of the instruction cycle (fetch, decode, execute) is performed in the correct order.

Handling Interrupts: The Control Unit can also handle interrupts—signals that indicate that an external or internal event needs immediate attention. When an interrupt occurs, the CU temporarily suspends the current instruction, processes the interrupt, and then resumes normal operation.
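One simple way to picture control-signal generation is a lookup table from opcode to signals. The signal names (`alu_op`, `mem_read`, `mem_write`, `reg_write`) and the four opcodes are assumptions for this sketch and are not taken from any specific CPU.

```python
# Hypothetical control table: each opcode maps to the signals it asserts.
CONTROL_TABLE = {
    "ADD":   {"alu_op": "ADD", "mem_read": False, "mem_write": False, "reg_write": True},
    "SUB":   {"alu_op": "SUB", "mem_read": False, "mem_write": False, "reg_write": True},
    "LOAD":  {"alu_op": None,  "mem_read": True,  "mem_write": False, "reg_write": True},
    "STORE": {"alu_op": None,  "mem_read": False, "mem_write": True,  "reg_write": False},
}


def control_signals(opcode: str) -> dict:
    """Return the control signals the control unit would assert for an opcode."""
    try:
        return CONTROL_TABLE[opcode]
    except KeyError:
        raise ValueError(f"unknown opcode: {opcode}") from None


print(control_signals("LOAD")["mem_read"])    # True
print(control_signals("STORE")["reg_write"])  # False
```

A hardwired control unit implements this kind of table in logic gates, while a microprogrammed one stores it in a small memory.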

Computers are built on different architectural models. Two well-known ones are:

Von Neumann

Harvard Architecture

a) Von Neumann Architecture

The Von Neumann architecture is a design model for a stored-program digital computer. It describes a system where the computer's memory stores both instructions (software) and data (the information that the instructions act upon).

This model is also called the stored-program computer.

It has two key properties:

1) Stored Program

Instructions are stored in a unified linear memory array.

Memory is shared between data and instructions.

Whether a stored value is interpreted as an instruction or as data depends on the control signals.

2) Sequential Instruction Processing

Only one instruction is processed at a time.

The program counter holds the address of the current instruction.

The program counter advances sequentially, except on control-transfer instructions.

The processor fetches one instruction at a time,

decodes and executes it,

then moves on to the next one.

How does this model work?

Fetch: The control unit fetches the next instruction to be executed from the memory location pointed to by the program counter (PC). The instruction is then stored in the instruction register (IR).

Decode: The control unit decodes the fetched instruction to understand what actions need to be performed. This step involves determining the type of instruction (e.g., an arithmetic operation or a data transfer) and the operands involved.

Execute: The control unit sends the appropriate signals to the ALU and other parts of the CPU to perform the operation specified by the instruction. If the instruction involves data, the relevant data is either fetched from memory or written back to memory.

Store: The result of the execution (if any) is stored back into memory or a register. The program counter is then updated to point to the next instruction, and the cycle repeats.
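The four steps above can be sketched as a tiny simulator. Everything here is an assumption made for illustration: a single accumulator register, four made-up instructions, and a Python list standing in for the unified memory that holds both instructions and data. This sketch advances the program counter right after the fetch, which is one common arrangement.

```python
def run(memory: list) -> int:
    """Run a program held in unified memory; return the final accumulator."""
    pc, acc = 0, 0
    while True:
        instruction = memory[pc]          # fetch from the address in the PC
        pc += 1                           # point the PC at the next instruction
        op, *args = instruction           # decode into opcode and operands
        if op == "LOAD":                  # execute: read data from memory
            acc = memory[args[0]]
        elif op == "ADD":
            acc += memory[args[0]]
        elif op == "STORE":               # store: write the result back
            memory[args[0]] = acc
        elif op == "HALT":
            return acc
        else:
            raise ValueError(f"unknown instruction: {op}")


# Addresses 0-3 hold instructions, addresses 4-6 hold data, in the same memory.
memory = [("LOAD", 4), ("ADD", 5), ("STORE", 6), ("HALT",), 2, 3, 0]
print(run(memory))   # 5
print(memory[6])     # 5
```

Because instructions and data share one memory, every `LOAD`, `ADD`, and `STORE` here needs a second memory access on top of its own fetch.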

Limitations:

The shared bus between the program memory and data memory leads to the von Neumann bottleneck, the limited throughput (data transfer rate) between the central processing unit (CPU) and memory compared to the amount of memory. Because the single bus can only access one of the two classes of memory at a time, throughput is lower than the rate at which the CPU can work. This seriously limits the effective processing speed when the CPU is required to perform minimal processing on large amounts of data. The CPU is continually forced to wait for needed data to move to or from memory.

b) Harvard Architecture

The Harvard architecture is a computer architecture that physically separates the storage and handling of instructions and data. This means that the system has two distinct memory units:

Instruction Memory: Stores only the instructions that the CPU will execute. Because it is separate from data memory, the CPU can fetch instructions without interference from data accesses.

Data Memory: Stores only the data that the program manipulates. Because it is separate from instruction memory, the CPU can read and write data independently of instruction fetching.

Harvard architecture utilizes two separate buses:

Instruction Bus: Used to fetch instructions from the instruction memory.

Data Bus: Used to read and write data from/to the data memory.

Having separate buses allows for concurrent access, which can enhance the overall performance of the system.

How Harvard Architecture Works

In Harvard architecture, the CPU can fetch an instruction and access data at the same time, thanks to the separate instruction and data buses. This concurrency reduces the bottleneck seen in Von Neumann architecture, where the CPU must alternate between fetching instructions and accessing data.
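A deliberately simplified cycle count illustrates the difference. The model assumes every memory access takes one bus cycle and that separate buses fully overlap an instruction fetch with a data access; real systems with caches and pipelines behave in more complicated ways.

```python
def bus_cycles(needs_data: list, shared_bus: bool) -> int:
    """Count memory cycles for a program under a toy bus model.

    needs_data[i] is True if instruction i also reads or writes data.
    """
    cycles = 0
    for accesses_data in needs_data:
        if shared_bus:
            # One bus: the fetch and the data access must take turns.
            cycles += 2 if accesses_data else 1
        else:
            # Separate buses: the data access overlaps an instruction fetch.
            cycles += 1
    return cycles


program = [True, True, False, True]   # hypothetical mix of instructions
print(bus_cycles(program, shared_bus=True))    # 7
print(bus_cycles(program, shared_bus=False))   # 4
```

The more data-heavy the program, the wider the gap between the two counts in this model.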

Connect with Me:

LinkedIn: Rana Umar Nadeem

Medium: @ranaumarnadeem

GitHub: ranaumarnadeem/HDL

Substack: We Talk Chips

![[Computer Architecture] Computer Memory - Structure of computer memory ...](https://substackcdn.com/image/fetch/$s_!j1sT!,w_1456,c_limit,f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2F97cd6ae7-e05d-4c85-b5c1-d3392527e965_2560x1481.png)